Wrapper for Siri Speech Recognition using iOS and Swift 3.0

Apple introduced the Speech framework, a useful API for speech recognition, with iOS 10 in 2016. It is one of the more fascinating additions in that release. Earlier I saw this amazing video on the Realm blog, which was the main motivation for writing this wrapper and this blog post.

Speech Recognition Library

This repository contains a pluggable library for using the iOS speech recognition service in any iOS application running iOS 10.

Please follow the steps below before integrating it into your application. Although the library is straightforward to use, there are a few caveats to address first.

Step 1:

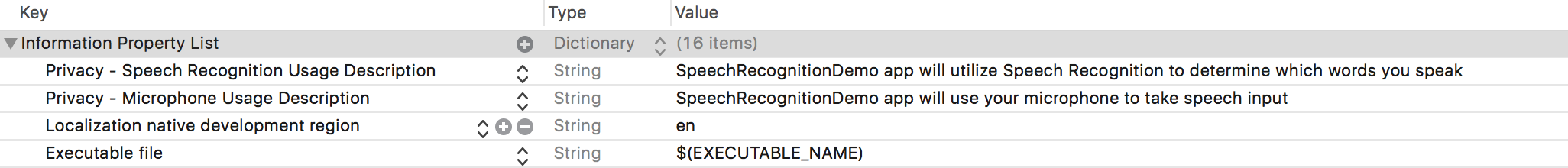

The first step is getting permission from the user. To use speech recognition, you need to add the following two keys to the app's plist file.

NSMicrophoneUsageDescription covers microphone access, and NSSpeechRecognitionUsageDescription covers taking the user's speech as input to the application. Add these keys at the top level of the plist, inside the main <dict> tag.

<key>NSSpeechRecognitionUsageDescription</key>

<string>SpeechRecognitionDemo app will utilize Speech Recognition to determine which words you speak</string>

<key>NSMicrophoneUsageDescription</key>

<string>SpeechRecognitionDemo app will use your microphone to take speech input</string>
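With both keys in place, the app still has to ask for permission at run time. The following is a minimal sketch of one way to do that, written for current Swift rather than Swift 3.0. The helper names missingUsageKeys and requestSpeechPermissions are hypothetical and not part of the library. The sketch first checks that both usage descriptions are present, since iOS terminates an app that requests access without them, and then requests speech recognition and microphone access.

```swift
import Foundation

// Usage-description keys that speech recognition needs in Info.plist.
let requiredUsageKeys = [
    "NSSpeechRecognitionUsageDescription",
    "NSMicrophoneUsageDescription"
]

// Hypothetical helper: returns the required keys that are missing or blank
// in the given Info.plist dictionary.
func missingUsageKeys(in infoDictionary: [String: Any]) -> [String] {
    return requiredUsageKeys.filter { key in
        guard let text = infoDictionary[key] as? String else { return true }
        return text.trimmingCharacters(in: .whitespaces).isEmpty
    }
}

#if os(iOS)
import Speech
import AVFoundation

// Hypothetical helper: asks for both permissions and reports the combined
// result on the main queue.
func requestSpeechPermissions(completion: @escaping (Bool) -> Void) {
    let missing = missingUsageKeys(in: Bundle.main.infoDictionary ?? [:])
    guard missing.isEmpty else {
        print("Missing Info.plist keys: \(missing)")
        DispatchQueue.main.async { completion(false) }
        return
    }
    SFSpeechRecognizer.requestAuthorization { status in
        guard status == .authorized else {
            DispatchQueue.main.async { completion(false) }
            return
        }
        // Speech access granted; now ask for the microphone.
        AVAudioSession.sharedInstance().requestRecordPermission { granted in
            DispatchQueue.main.async { completion(granted) }
        }
    }
}
#endif
```

The key check is plain Foundation code, so it can be exercised in a playground or unit test; the permission prompts themselves only appear on a device or simulator.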

Step 2:

Initializing SpeechRecognitionUtility

To use the library, first initialize an object of type SpeechRecognitionUtility.

Example:

if speechRecognizerUtility == nil {

// Initialize the speech recognition utility here

speechRecognizerUtility = SpeechRecognitionUtility(speechRecognitionAuthorizedBlock: { [weak self] in

self?.toggleSpeechRecognitionState()

}, stateUpdateBlock: { [weak self] (currentSpeechRecognitionState, finalOutput) in

// A block to update the status of speech recognition. This block will get called every time Speech framework recognizes the speech input

self?.stateChangedWithNew(state: currentSpeechRecognitionState)

// We won't perform translation until final input is ready. We will usually wait for users to finish speaking their input until translation request is sent

if finalOutput {

self?.toggleSpeechRecognitionState()

self?.speechRecognitionDone()

}

}, timeoutPeriod: 1.5)

// We set the speech recognition timeout to make sure we get the full string output once the user has stopped talking. For example, suppose the timeout is 2 seconds. The user starts speech recognition and speaks continuously (hopefully for less than a full minute); once they pause for more than 2 seconds, the value of finalOutput in the block above becomes true. Until then you keep receiving output, but it is not final.

} else {

// We will call this method to toggle the state on/off speech recognition operation.

self.toggleSpeechRecognitionState()

}

The initializer takes the following parameters:

- speechRecognitionAuthorizedBlock - Called when the user authorizes the app to use the device microphone and allows access to the speech recognizer. The app can then capture what the user says and use it for further processing.

- stateUpdateBlock - Called every time SpeechRecognitionUtility changes state. Possible states include, but are not limited to, speechRecognised(String), speechNotRecognized, authorized and audioEngineStart. Please refer to the official Apple documentation for the list of all possible states. The client app can take the appropriate action based on the state change reported by the SpeechRecognitionUtility object.

- timeoutPeriod - A new parameter added in the latest version of the library. When the user is dictating, we do not want to process every word as it arrives: doing so can affect battery life, and it is difficult to infer what the user means from partial input. Instead, the utility keeps taking user input, aggregates it, and updates the finalOutput flag passed to stateUpdateBlock. The flag stays false until there is a pause longer than timeoutPeriod. Once it is true, you can assume the user has stopped providing input and proceed to use the dictated string. The default timeoutPeriod is 1.5 seconds, which means that if the user pauses for more than 1.5 seconds while dictating, we proceed with the text detected so far.
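To make the timeoutPeriod behaviour concrete, here is a small sketch of the pause check on its own. PauseDetector is a hypothetical type written for this illustration and is not the library's actual implementation; it only models the rule that finalOutput becomes true once the gap since the last recognized input exceeds timeoutPeriod.

```swift
import Foundation

// Hypothetical stand-in for the pause detection described above; this is not
// the library's actual implementation.
struct PauseDetector {
    let timeoutPeriod: TimeInterval
    private(set) var aggregatedText = ""
    private var lastUpdate: TimeInterval?

    init(timeoutPeriod: TimeInterval = 1.5) {
        self.timeoutPeriod = timeoutPeriod
    }

    // Record the latest partial transcription and the time it arrived.
    mutating func update(text: String, at time: TimeInterval) {
        aggregatedText = text
        lastUpdate = time
    }

    // True once the pause since the last update exceeds timeoutPeriod.
    func isFinalOutput(at time: TimeInterval) -> Bool {
        guard let lastUpdate = lastUpdate else { return false }
        return time - lastUpdate > timeoutPeriod
    }
}

var detector = PauseDetector()
detector.update(text: "translate", at: 0.0)
detector.update(text: "translate this", at: 0.8)
print(detector.isFinalOutput(at: 1.5))  // false: only 0.7 s since the last word
print(detector.isFinalOutput(at: 2.4))  // true: 1.6 s of silence exceeds 1.5 s
```

On a device, the same check would more likely be driven by a timer that is restarted on every partial result, rather than by explicit timestamps as in this sketch.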

Step 3:

To toggle speech recognition on and off, call the following method on SpeechRecognitionUtility:

try self.speechRecognizerUtility?.toggleSpeechRecognitionActivity()

This method switches the speech recognition activity on and off. Since it can throw an error, the call must be wrapped in a do-catch block.

do {

try self.speechRecognizerUtility?.toggleSpeechRecognitionActivity()

} catch {

print("Error: \(error)")

}

You will typically call this method in the following situations:

- When the SpeechRecognitionUtility object has been initialized and the user has granted the required authorizations, to switch the utility on

- When the user wants to stop speech recognition

- When the utility detects the end of the speech recognition operation, based on the timeoutPeriod specified during initialization and the value of finalOutput, to stop the utility and start processing the output

- When you are done processing the previous output, as mentioned in the previous step, to call the toggle method again and switch the speech recognition routine back on

For reference, the errors that can be thrown during speech recognition include, but are not limited to:

- denied

- notDetermined

- restricted

- inputNodeUnavailable

- audioSessionUnavailable

Please refer to the library for the list of all possible errors that this function can throw.
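Printing a generic message hides which of these errors occurred. The sketch below shows one way the catch block could map each error to something the user can act on. SpeechRecognitionOperationError is a hypothetical enum that mirrors only the cases listed above, and the local toggleSpeechRecognitionActivity() is a stand-in so the example runs on its own; the library's actual type name and case list may differ.

```swift
import Foundation

// Hypothetical error type mirroring the cases listed above; the library's own
// type name and full case list may differ.
enum SpeechRecognitionOperationError: Error {
    case denied
    case notDetermined
    case restricted
    case inputNodeUnavailable
    case audioSessionUnavailable
}

// Maps an error thrown by the toggle call to a message suitable for the user.
func userMessage(for error: Error) -> String {
    guard let speechError = error as? SpeechRecognitionOperationError else {
        return "Speech recognition failed: \(error.localizedDescription)"
    }
    switch speechError {
    case .denied:
        return "Speech recognition access was denied. Enable it in Settings."
    case .notDetermined:
        return "Speech recognition permission has not been requested yet."
    case .restricted:
        return "Speech recognition is restricted on this device."
    case .inputNodeUnavailable:
        return "No audio input is available."
    case .audioSessionUnavailable:
        return "The audio session could not be configured."
    }
}

// Stand-in for the utility's toggle method so the sketch runs on its own.
func toggleSpeechRecognitionActivity() throws {
    throw SpeechRecognitionOperationError.denied
}

do {
    try toggleSpeechRecognitionActivity()
} catch {
    print(userMessage(for: error))
}
```

Because the switch has no default case, the compiler flags any error case that is added later and not yet handled.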

Also note the significance of stateUpdateBlock as part of the initializer. Every time the speech recognizer utility changes state, this block notifies the client of the current status, and the client can then take suitable action. For example, we can detect a speech recognition event with the following state check:

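speechRecognizerUtility = SpeechRecognitionUtility(speechRecognitionAuthorizedBlock: { [weak self] in

// Start recognition here once the user has authorized the app

self?.toggleSpeechRecognitionState()

}, stateUpdateBlock: { (currentSpeechRecognitionState, finalOutput) in

// Speech recognized state

switch currentSpeechRecognitionState {

case .speechRecognised(let recognizedString):

print("Recognized String \(recognizedString)")

default:

// Ignore the other states in this example

break

}

}, timeoutPeriod: 1.5)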

Possible states include, but are not limited to:

- speechRecognised(String)

- speechNotRecognized

- authorized

- audioEngineStart

- audioEngineStop

- availabilityChanged(Bool)
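As an illustration of acting on each state, the sketch below maps every state listed above to a status line that a client app might show. SpeechRecognitionOperationState and statusText are hypothetical names introduced for this example; the enum mirrors only the cases named in this post, and the library's own enum may contain more.

```swift
// Hypothetical mirror of the states listed above; the library's enum may have
// a different name and more cases.
enum SpeechRecognitionOperationState {
    case speechRecognised(String)
    case speechNotRecognized
    case authorized
    case audioEngineStart
    case audioEngineStop
    case availabilityChanged(Bool)
}

// Hypothetical helper: turns a state into text for a status label.
func statusText(for state: SpeechRecognitionOperationState) -> String {
    switch state {
    case .speechRecognised(let text):
        return "Heard: \(text)"
    case .speechNotRecognized:
        return "Could not recognize speech"
    case .authorized:
        return "Ready"
    case .audioEngineStart:
        return "Listening..."
    case .audioEngineStop:
        return "Stopped"
    case .availabilityChanged(let available):
        return available ? "Recognizer available" : "Recognizer unavailable"
    }
}

print(statusText(for: .speechRecognised("hello")))  // Heard: hello
print(statusText(for: .availabilityChanged(false)))  // Recognizer unavailable
```

A function like this could be called from stateUpdateBlock to keep a label in sync with the utility.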

The library also includes a sample project that demonstrates its usage, including the applicable error conditions and state handling. (Please make sure to use the develop branch from the GitHub repository.)

Update:

One thing I previously missed in this post was how to handle all the transcriptions provided, not just the best one.

I have added the required code to the develop branch of the GitHub repository that hosts this utility.

To do this, grab the result passed to the result handler block:

speechRecognizer.recognitionTask(with: recognitionRequest, resultHandler: { [weak self] (result, error) in

//.....

})

Then go over the transcriptions array and collect the segments associated with each transcription. iOS also provides a confidence level for each segment, so we can compute a total confidence level for an individual transcription by adding up the confidence of its segments.

// A variable to keep track of maximum confidence level

var maximumConfidenceLevel: Float = 0.0

// Default value of bestTranscription is the first object from the transcriptions array

var bestTranscription = result?.transcriptions.first

if let transcriptions = result?.transcriptions {

for transcription in transcriptions {

// We will set the total confidence value for current transcription and use the transcription with maximum value of total confidence level.

var totalConfidenceValue: Float = 0.0

for segment in transcription.segments {

totalConfidenceValue = totalConfidenceValue + segment.confidence

}

// Check if current value of totalConfidenceValue is greater than maximumConfidenceLevel previously set

if totalConfidenceValue > maximumConfidenceLevel {

bestTranscription = transcription

maximumConfidenceLevel = totalConfidenceValue

}

}

}

// Ideally this value should equal the ready-made value provided by iOS, which is result?.bestTranscription.formattedString

print("Best Transcription is \(bestTranscription?.formattedString ?? "")")
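The same selection can be tried without a device by swapping the Speech framework types for simple stand-ins. In the sketch below, Segment and Transcription are hypothetical structs that model only the properties used here (they are not SFTranscriptionSegment and SFTranscription), and mostConfident is a hypothetical helper that applies the same sum-of-confidence rule.

```swift
// Stand-ins for SFTranscriptionSegment and SFTranscription, reduced to the
// properties this example needs so that it runs without the Speech framework.
struct Segment {
    let substring: String
    let confidence: Float
}

struct Transcription {
    let segments: [Segment]

    var formattedString: String {
        return segments.map { $0.substring }.joined(separator: " ")
    }

    // Sum of the per-segment confidence values, as in the loop above.
    var totalConfidence: Float {
        return segments.reduce(0) { $0 + $1.confidence }
    }
}

// Hypothetical helper: returns the transcription with the highest total confidence.
func mostConfident(_ transcriptions: [Transcription]) -> Transcription? {
    return transcriptions.max { $0.totalConfidence < $1.totalConfidence }
}

let candidates = [
    Transcription(segments: [Segment(substring: "wreck", confidence: 0.4),
                             Segment(substring: "a", confidence: 0.5),
                             Segment(substring: "nice", confidence: 0.3),
                             Segment(substring: "beach", confidence: 0.4)]),
    Transcription(segments: [Segment(substring: "recognize", confidence: 0.9),
                             Segment(substring: "speech", confidence: 0.8)])
]
print(mostConfident(candidates)?.formattedString ?? "")  // recognize speech
```

Because the score is a sum, a transcription with more segments can outscore a shorter one whose individual segments are more confident. Dividing the total by the number of segments is one way to compare transcriptions of different lengths on equal footing.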

I hope this library will be useful to you while implementing speech recognition in your app. It consists of a single file written in Swift 3.0, so adding it to a project should be as simple as dragging and dropping the file that houses the speech recognition utility. Please let me know if you have further questions on the usage or utility of this library.

Update:

I noticed later that I hadn't described how to avoid getting feedback for partial results and instead receive a single result at the end of the operation.

To do this, set the shouldReportPartialResults property on the SFSpeechAudioBufferRecognitionRequest to false while setting up the speech recognition task:

recognitionRequest.shouldReportPartialResults = false

This line will make sure that the callback

speechRecognizer?.recognitionTask(with: recognitionRequest, resultHandler: { [weak self] (result, error) in

won't be called every time speech is recognized by the speech recognition task. This, however, requires a user action to tell the app that speech has been completed. To achieve that, you can start the speech recognition operation and provide a button in the app labelled, for example, "I am done", "Search keyword" or "Do some action on said words".

When the user presses this button, call the finish() method on the SFSpeechRecognitionTask object:

recognitionTask.finish()

This will trigger the following callback, and you will get the whole string that was provided to the app during the speech.

recognitionTask = speechRecognizer?.recognitionTask(with: recognitionRequest, resultHandler: { [weak self] (result, error) in

// bestTranscription holds the complete text once the task has finished

if let recognizedSpeechString = result?.bestTranscription.formattedString {

print("Full recognized text is \(recognizedSpeechString)")

}

})

Note that this is an alternative approach that can improve performance if you don't want to burden the device with a callback every time speech is recognized. Which approach you prefer depends on the use case and the intended UX for the end user.

A few things to note:

- The speech recognition utility is available only on iOS 10 and later, which makes this library unusable for apps targeting iOS versions lower than 10.0

- This library is written in Swift 3.0. If you are planning to integrate it into your project, make sure the project uses Swift 3.0 syntax, or change the library to use Swift 2.x syntax

- You might also want to use visual cues to indicate which state the app is in. The sample app on the develop branch of the above repository takes an extreme approach by changing the text and background color depending on the current state

- To get fancier, you can also include additional machine learning algorithms to infer what the user has dictated. For example, the user can ask an app that supports multilingual translation to translate the input into a specific language

You can find the full source code here, with a detailed readme on the usage and description of the wrapper.

References and Further reading:

WWDC 2016 - Session 509

AppCoda Speech recognition tutorial

Speech recognition with Swift in iOS 10

Implementing Speech Recognition in Your App